Labscape is an interactive system that uses cameras and microphones as sensors to track human activity in architectural spaces, and uses the retrieved data to affect the behavior of generated graphical landscapes. The dimensions and movement of these data-nourished ecosystems are a function of the amount of sound, movement and light present in the different spaces under "passive surveillance" around the building.

The project is currently being developed at ISNM (International School of New Media), in collaboration with other members of the school. The idea is to explore dimensions of organic interaction between humans and computer systems, using control and surveillance technologies. What if the eye-machine, or the almighty sensor networks that are expanding nowadays, could be a receptacle or a platform for a new kind of context-aware ecosystem?

Technical description

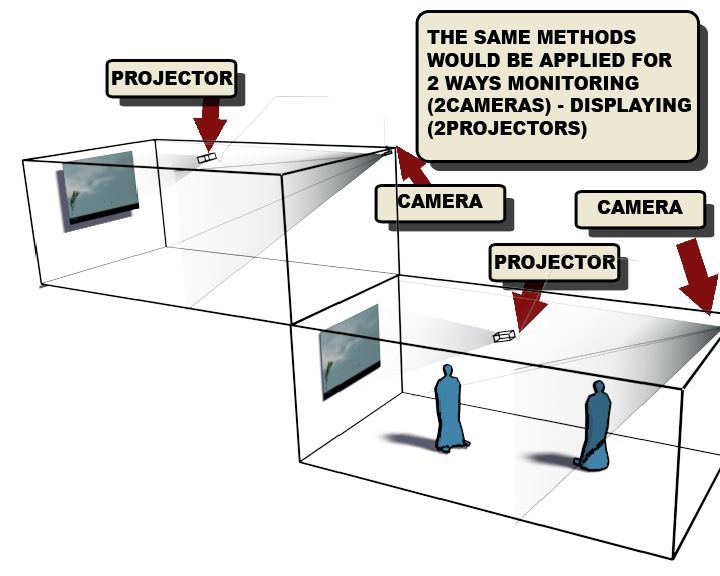

The system consists of a simple networked application, specially designed to write and receive data values to and from remote locations.
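As a rough illustration of writing and receiving data values between locations, the following is a minimal sketch only. The description does not specify the transport or message format, so the use of UDP datagrams and JSON here is an assumption, and the function names (`encode_reading`, `decode_reading`, `send_reading`, `receive_reading`) and field names are hypothetical.

```python
import json
import socket


def encode_reading(space_id, movement):
    """Serialize one sensor reading as UTF-8 JSON bytes (assumed format)."""
    return json.dumps({"space": space_id, "movement": movement}).encode("utf-8")


def decode_reading(payload):
    """Parse bytes produced by encode_reading back into (space_id, movement)."""
    data = json.loads(payload.decode("utf-8"))
    return data["space"], float(data["movement"])


def send_reading(host, port, space_id, movement):
    """Send one reading to a remote location as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_reading(space_id, movement), (host, port))


def receive_reading(sock):
    """Block until one datagram arrives on a bound UDP socket, then decode it."""
    payload, _addr = sock.recvfrom(1024)
    return decode_reading(payload)
```

Each installation could call `send_reading` whenever it has a new measurement and use `receive_reading` on a bound socket to pick up values from the other space. UDP is chosen here only because occasional lost readings would not matter much for a slowly changing visualization.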

For the prototype, we have just a webcam tracking the average movement in each space by means of a basic blob tracking algorithm implemented in the Processing environment. The captured data is used locally and sent to a remote client and, on both sides, a simple Lindenmayer system (L-system) algorithm is used to draw a set of plants and control their behavior, which is affected by the data captured in both spaces under surveillance. In future versions we aim to add microphones, light sensors and temperature sensors.
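The pipeline described above can be sketched as follows. This is not the project's Processing code: it substitutes plain frame differencing for blob tracking to estimate average movement, and the mapping from movement to plant growth is an assumption made for illustration. All function names, the threshold, and the rewriting rule are hypothetical.

```python
def average_movement(prev_frame, frame, threshold=30):
    """Fraction of pixels whose grayscale value changed by more than threshold.

    Frames are lists of rows of 0-255 integers. This is simple frame
    differencing, used here as a stand-in for blob tracking.
    """
    changed = 0
    total = 0
    for prev_row, row in zip(prev_frame, frame):
        for p, q in zip(prev_row, row):
            total += 1
            if abs(p - q) > threshold:
                changed += 1
    return changed / total if total else 0.0


def expand_lsystem(axiom, rules, iterations):
    """Rewrite every symbol in parallel, `iterations` times."""
    current = axiom
    for _ in range(iterations):
        current = "".join(rules.get(symbol, symbol) for symbol in current)
    return current


def plant_for_movement(movement, max_iterations=4):
    """Map a movement level in [0, 1] to an L-system string.

    Assumed mapping: more movement means more rewriting steps,
    so the plant grows more branches.
    """
    movement = min(max(movement, 0.0), 1.0)
    iterations = 1 + round(movement * (max_iterations - 1))
    rules = {"F": "F[+F]F[-F]F"}  # a commonly used bracketed branching rule
    return expand_lsystem("F", rules, iterations)
```

In the resulting string, `F` would be drawn as a forward segment, `+` and `-` as turns, and `[` and `]` as saving and restoring the drawing position, which is the usual turtle-graphics reading of a bracketed L-system. A still room yields a small plant and a busy room a denser one.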

Required equipment

- 2 webcams
- 2 Windows PCs, each with:
  - Windows XP Professional operating system
  - At least 2.6 GHz CPU, with 512 MB RAM
  - At least 3 USB slots
- 1 video projector
- 8 microphones
- 4 light sensors
- 2 temperature sensors